Internet2 cloud solutions give you direct access to major cloud providers and secure, convenient single sign-on to services. Our solutions are safe, secure, and scalable.

Cloud

Strategic Cloud Service Decision-Making

The Cloud Scorecard is a self-assessment completed by the vendor using standards and best practices specific to the research and education community.

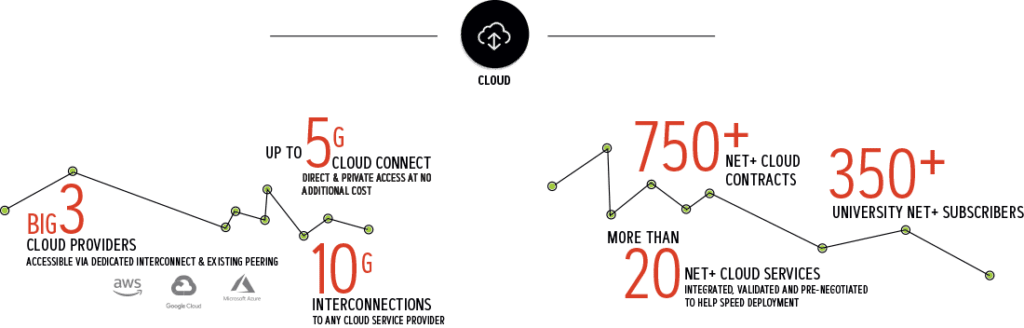

Cloud Access

When moving infrastructure to the cloud, organizations need speed, capacity, reliability, and security. Internet2 solutions, developed by and for research and education, can help you deliver.

Community-vetted Cloud Services

How do you know what will work best for your campus? Start with the NET+ services evaluated by your colleagues, specific to research and education.

High-performance Access to Commercial Content Destinations

The Internet2 Peer Exchange (I2PX) provides high-performance, low-latency, efficient access to top commercial content destinations such as Google, Yahoo, and Netflix.

Access Control and Security

However you connect to cloud providers, access control and security are essential. Through InCommon, Internet2 offers secure single sign-on, plus a suite of identity and access management tools developed by the community specifically for research and education.

CLASS Training and Development

Cloud Learning and Skills Session (CLASS) provides training and workforce development programs to help institutions and IT organizations that support research and research groups.

Quick Links

News

Check out the latest Cloud news and blogs by technical staff and our community.

Community Groups

Come be a part of the community! Join a cloud community group or read the latest from working groups.

Case Study

Read the “Universities and Google Collaborate to Create Google Cloud Platform” case study, featuring Boston University, Indiana University, Michigan State University, the University of Washington, and Washington University in St. Louis.

Case Study

Read how universities worked through Internet2 NET+ Amazon Web Services to remove cloud computing cost constraints and enhance scientific collaboration and application development.

Community Collaboration

Internet2 Events

Registration is open for TechEX25, slated for Dec. 8-12 in Denver, Colo.

View slides from the 2025 Community Exchange.

We want to hear from you.

Contact us for more information.